Optimizing the Traveling Salesman Problem: Integrating Graph Neural Networks With Branch and Bound Algorithms

Learn how integrating Graph Neural Networks (GNNs) with the Branch & Bound (B&B) algorithm significantly advances solving the Traveling Salesman Problem (TSP).

Join the DZone community and get the full member experience.

Join For FreeThe Traveling Salesman Problem (TSP) is one of the challenging problems in operations research. Basically, TSP is about optimizing the route for the salesperson to efficiently visit the list of cities and return to the starting point. Although the problem's premise is simple, its computational complexity makes it a significant focus for both theoretical studies and practical implementations.

Understanding Traveling Salesman Problem's Complexity

The primary challenge of the TSP stems from its combinatorial nature. If a salesman visits n cities, they must evaluate (n−1)!/2 possible routes due to the flexibility in choosing the starting point and reversing the route. This factorial increase means that even a small number of cities results in a massive number of possible routes. For instance, with 20 cities, the salesman must consider about 60 billion routes. This phenomenon, termed a "combinatorial explosion," makes brute force methods impractical for large datasets.

Solving Traveling Salesman Problem With Branch and Bound

The branch and bound algorithm widely solves optimization problems like the Traveling Salesman Problem. It systematically evaluates all possible alternatives to find the optimal solution, while simultaneously excluding large parts of the search space that are unlikely to produce the best solution.

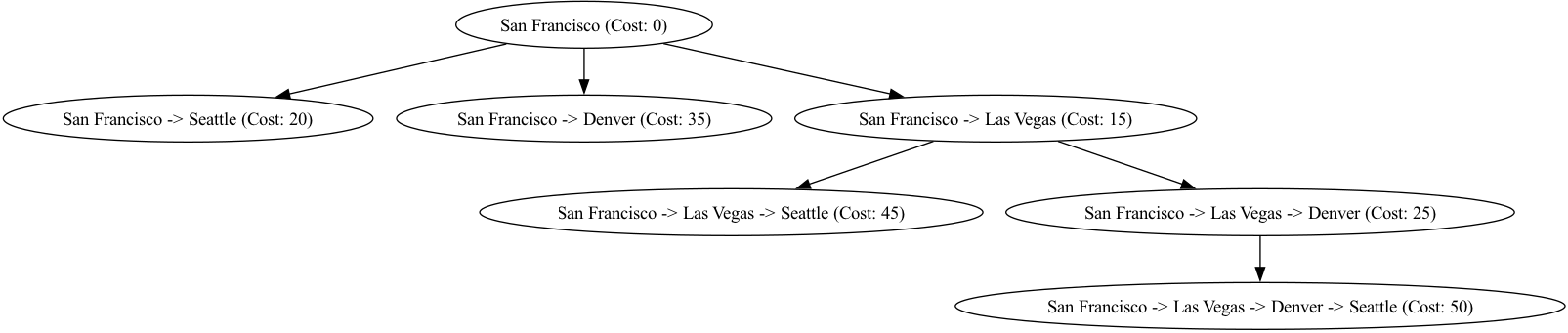

Representation of the Problem as a Decision Tree

In the context of TSP, the problem can be visualized as a decision tree where each node represents a partial route taken by the salesperson. The root of the tree is the starting city, and each branch from a node represents a possible next city to visit, extending the partial route.

For example, a salesman starts in San Francisco. The root node represents San Francisco. From there, the salesman might choose to visit Seattle, Denver, or Las Vegas next, each forming a new branch in the decision tree.

Bounding

At each node in the decision tree, the algorithm calculates a lower bound on the possible cost of completing the route from that node. This lower bound is an estimate of the minimum additional cost required to complete the tour starting from the partial route represented by the node.

For example, if the salesman has already traveled from San Francisco to Seattle, the algorithm calculates the least cost required to visit the remaining cities (Denver and Las Vegas) and return back to San Francisco. This estimation helps in determining whether to explore further from the current node.

Branching

The algorithm extends the current node by generating its children, where each child node represents a new partial route that includes one additional city not yet visited. This step breaks the problem into smaller subproblems that can be explored independently.

Continuing the example, if the salesman has traveled from San Francisco to Seattle, the next branching might include routes from Seattle to Denver or Seattle to Las Vegas. These new branches further extend the decision tree.

Pruning

To optimize the search process, the algorithm compares the lower bound of each node with the cost of the optimal solution found so far. If the lower bound of a node is greater than or equal to the cost of the current optimal solution, then that node and all its child nodes are pruned and not explored further. This technique significantly reduces the number of nodes that need to be explored, thereby narrowing down the search space.

For example, suppose the current best solution has a cost of 25. If the lower bound calculated for the route San Francisco → Seattle → Denver is 30, then this route and its extensions can be pruned because they cannot yield a better solution than 25.

Exploring Nodes

The algorithm continues to explore the remaining nodes (those not pruned), repeating the branching and bounding process. It keeps track of the best solution found during the search. When all nodes are either pruned or explored, the best solution at that point is the optimal solution.

Example

Consider a simple TSP with four cities: San Francisco (A), Seattle (B), Denver (C), and Las Vegas (D).

Initial Step

- Start at city A (San Francisco).

- Calculate the lower bound for the initial state using heuristic estimates or other methods.

Branching from A

- Generate branches for possible next cities: B (Seattle), C (Denver), and D (Las Vegas).

Calculating Bounds

- For each branch, calculate the lower bound on the cost to complete the tour. For example, the branch from A to B might have a lower bound that includes the minimum costs of visiting the remaining cities and returning to A.

Pruning

- Suppose the branch A → B has a lower bound of 20, A → C has a lower bound of 35, and A → D has a lower bound of 15.

- If the current best solution has a cost of 15, prune branches A → B and A → C because their bounds exceed 15.

Exploring Remaining Nodes

- Continue exploring from A → D. From D, branch out to C and B, and repeat the bounding and pruning process until all possibilities are either explored or pruned.

By systematically applying these steps, the branch and bound method effectively narrows down the search space and finds the optimal solution without having to examine every possible route exhaustively. This is particularly powerful for problems like TSP, where the number of possible solutions grows factorially with the number of cities.

Pseudo Code for Branch and Bound TSP

Definitions

distance_matrix: A 2D array representing the distances between city pairscities: A list of city namesbest_tour: The best route foundbest_cost: The cost of the best route foundstack: A data structure for depth-first search (DFS)

Functions

calculate_lower_bound(tour): Calculates the lower bound on the cost to complete the tour from a partial route

Algorithm

def branch_and_bound_tsp(distance_matrix):

# Initialize best_tour as None

best_tour = None

# Initialize best_cost as infinity

best_cost = float('inf')

# Initialize stack with root node (0,) and cost 0

stack = [((0,), 0)]

decision_tree = []

while stack:

tour, cost = stack.pop()

if len(tour) == len(distance_matrix):

cost += distance_matrix[tour[-1]][tour[0]] # Complete the tour

if cost < best_cost:

best_cost = cost

best_tour = tour

else:

remaining_cities = [i for i in range(len(distance_matrix)) if i not in tour]

for city in remaining_cities:

new_tour = tour + (city,)

new_cost = cost + distance_matrix[tour[-1]][city]

lower_bound = calculate_lower_bound(distance_matrix, new_tour)

if lower_bound < best_cost:

stack.append((new_tour, new_cost))

# Add node to decision tree: new_tour with label showing cities and cost

decision_tree.append((new_tour, new_cost))

# Add edge to decision tree: from tour to new_tour

return best_tour, best_cost, decision_tree

def calculate_lower_bound(distance_matrix, tour):

bound = 0

remaining_cities = [i for i in range(len(distance_matrix)) if i not in tour]

# Add edges already in the tour

for i in range(1, len(tour)):

bound += distance_matrix[tour[i-1]][tour[i]]

# Estimate the cost to complete the tour

if remaining_cities:

bound += min(distance_matrix[tour[-1]][city] for city in remaining_cities)

for city in remaining_cities:

if len(remaining_cities) > 1:

bound += min(distance_matrix[city][i] for i in remaining_cities if i != city)

else:

bound += distance_matrix[city][tour[0]] # Include return to start city

return bound

# Example distance matrix and cities

distance_matrix = [

[0, 20, 35, 15], # San Francisco

[20, 0, 25, 30], # Seattle

[35, 25, 0, 10], # Denver

[15, 30, 10, 0] # Las Vegas

]

cities = ['San Francisco', 'Seattle', 'Denver', 'Las Vegas']

best_tour, best_cost, decision_tree = branch_and_bound_tsp(distance_matrix)

# Output the best tour and its cost

print(f"Best tour: {' -> '.join(cities[i] for i in best_tour)}")

print(f"Cost of the best tour: {best_cost}")

Complexity of the Branch and Bound Algorithm

While the branch and bound algorithm is a powerful tool, it faces significant challenges when applied to the Traveling Salesman Problem.

Time Complexity

In the worst-case scenario, the time complexity of the branch and bound algorithm is factorial, denoted as O(n!), where n represents the number of cities. The number of possible routes grows factorially with the number of cities. For example, with just four cities San Francisco (A), Seattle (B), Denver (C), and Las Vegas (D) there are 4! (24) possible routes to evaluate. This number becomes unmanageable as the number of cities increases.

High Computational Cost

Evaluating and pruning branches in the search tree requires significant computational resources. As the number of cities increases, the complexity and the time required to find the optimal solution will also increase.

Inefficient Pruning

B&B relies on heuristic methods to estimate lower bounds for pruning non-promising branches. If these heuristics are not effective, the algorithm may explore many unnecessary branches, further increasing computational time.

Graph Neural Networks (GNN)

To overcome the constraints of traditional methods, recent progress in machine learning, especially with Graph Neural Networks (GNNs), has presented a promising solution. GNNs leverage the intrinsic structure of graph data, introducing innovative techniques that improve upon existing algorithms like the Branch and Bound (B&B) method. By using the representational capabilities of graphs, GNNs can effectively model complex relationships, thereby enhancing computational efficiency and helping in more informed decision-making processes.

Enhanced State Representation through Graph Encoding

Graph Neural Networks (GNNs) can transform the TSP graph, where cities are represented as nodes and distances as edges, into high-dimensional embeddings. These embeddings effectively capture the complex relationships between cities, offering a richer representation than traditional methods. For instance, for cities like San Francisco, Seattle, Denver, and Las Vegas, GNNs can generate embeddings that reflect both distances and the overall topology, providing a more detailed state representation.

Improved Heuristics for Initialization

Starting the B&B algorithm with nearly optimal heuristic solutions can significantly speed up the process. GNNs can predict these initial solutions by using learned embeddings to estimate more accurate starting points or bounds.

For example, a GNN trained on numerous TSP instances may learn that starting a route from San Francisco to Denver might be more efficient than other initial routes, even if raw distance data suggests otherwise.

Better Decision-Making in Dynamic Branch Selection

Choosing promising branches in the B&B process is vital for efficiency. GNNs help by providing embeddings that predict the potential success of branching decisions, focusing on more likely optimal branches and reducing the search space.

For example, embeddings might indicate that a branch from Seattle to Denver holds more promise due to better intermediary connections or lower overall distance than a branch from Seattle to Las Vegas. This allows the B&B algorithm to prioritize more strategic routes.

Efficient Pruning With Enhanced Bounding

GNNs refine the bounding process in B&B by generating accurate heuristic estimates, enabling tighter and more effective bounds.

For a partial route from San Francisco to Seattle, a GNN can provide a sophisticated estimate of the lower bound for completing the tour. This bound is based on an intricate understanding of the entire network's topology and distances, allowing the B&B algorithm to discard non-optimal paths more effectively.

Pseudo Code for Integrating GNN With Branch and Bound for TSP

def GNN_encode(distance_matrix):

GNN_model = initialize_GNN_model()

graph = convert_to_graph(distance_matrix)

embeddings = GNN_model.generate_embeddings(graph)

return embeddings

def convert_to_graph(distance_matrix):

graph = create_empty_graph()

for i in range(len(distance_matrix)):

for j in range(len(distance_matrix)):

if i != j:

add_edge(graph, i, j, distance_matrix[i][j])

return graph

def initialize_GNN_model():

return create_GNN_model(layers, activations, learning_rate)

def integrate_gnn_with_branch_and_bound_tsp(distance_matrix):

best_tour, best_cost = None, float('inf')

stack = [((0,), 0)]

embeddings = GNN_encode(distance_matrix)

while stack:

tour, cost = stack.pop()

if len(tour) == len(distance_matrix):

complete_cost = cost + distance_matrix[tour[-1]][tour[0]]

if complete_cost < best_cost:

best_tour, best_cost = tour, complete_cost

else:

remaining_cities = [i for i in range(len(distance_matrix)) if i not in tour]

for city in remaining_cities:

new_tour = tour + (city,)

new_cost = cost + distance_matrix[tour[-1]][city]

lower_bound = calculate_lower_bound(new_tour, embeddings)

if lower_bound < best_cost:

stack.append((new_tour, new_cost))

return best_tour, best_cost

def calculate_lower_bound(tour, embeddings):

bound = sum(distance_matrix[tour[i-1]][tour[i]] for i in range(1, len(tour)))

remaining_cities = [i for i in range(len(distance_matrix)) if i not in tour]

if remaining_cities:

bound += min(distance_matrix[tour[-1]][city] for city in remaining_cities)

bound += sum(min(distance_matrix[city][i] for i in remaining_cities if i != city)

for city in remaining_cities)

bound += distance_matrix[remaining_cities[-1]][tour[0]]

return bound

# Example distance matrix and cities

distance_matrix = [

[0, 20, 35, 15], # San Francisco

[20, 0, 25, 30], # Seattle

[35, 25, 0, 10], # Denver

[15, 30, 10, 0] # Las Vegas

]

cities = ['San Francisco', 'Seattle', 'Denver', 'Las Vegas']

# Run the integrated algorithm

best_tour, best_cost = integrate_gnn_with_branch_and_bound_tsp(distance_matrix)

# Output the best tour and its cost

print(f"Best tour: {' -> '.join(cities[i] for i in best_tour)}")

print(f"Cost of the best tour: {best_cost}")Future Scope and Prospects

Combining GNNs with Reinforcement Learning (RL) can further improve the decision-making process in the B&B algorithm. RL can dynamically adjust strategies for exploring and pruning branches based on real-time feedback, leading to even more efficient solutions. Over time, RL agents can learn from past experiences and enhance their strategies, adapting to different instances of TSP and other optimization problems.

Conclusion

Integrating Graph Neural Networks (GNNs) with the Branch & Bound (B&B) algorithm represents a significant advancement in solving the Traveling Salesman Problem (TSP). This hybrid approach effectively addresses the scalability and efficiency limitations of traditional methods, paving the way for tackling various complex combinatorial challenges. As research continues, the synergy between GNNs, B&B, and other cutting-edge optimization techniques is expected to yield robust solutions for increasingly intricate and dynamic problems, fostering innovative applications across diverse fields.

Opinions expressed by DZone contributors are their own.

Comments